Queplix Corp. is bringing its QueCloud data virtualization, integration and management technologies to the Salesforce.com AppExchange. QueCloud will let Salesforce.com users quickly and securely integrate with other leading SaaS and on-premise applications from SAP, Oracle and NetSuite.

Queplix’s QueCloud uses data virtualization and metadata to simplify integration: users simply select the data they want to integrate, share or align with Salesforce.

The company’s data virtualization and data management approach is designed to avoid costly and complicated ETL (extract, transform and load) and to simplify data sharing and integration across multiple applications by eliminating the need for programming, wiring diagrams or even SQL.

“A lot of middleware and integration technologies remain glorified ETL tools to cope with a growing number of endpoints and connectors,” Queplix’s CTO, Steve Yaskin, told IDN. “On the whole, many [integration] solution providers are still largely just laying pipe or using ETL, stored procedures or working with SQL commands.”

After integration, Queplix’s QueCloud operations and automation will maintain data harmonization across the different applications, without the need for programming or manual data diagrams typically associated with traditional ETL technology.

Yaskin is a veteran of many point-to-point and ETL-based integration architectures, having worked for leading system integrators such as Accenture and Capgemini before joining Queplix as CTO.

Queplix created QueCloud on the premise that long-standing ETL technologies are about to hit a breaking point, driven by the skyrocketing growth of web-based and cloud-based SaaS applications and data sources, Yaskin told IDN.

“Companies are now, or soon will be, where the number of [integration] connectors is just becoming unmanageable. So, laying pipe with ETL just won’t scale to meet these newer architectures,” Yaskin said.

Queplix and QueCloud avoid laying pipe altogether by using data virtualization and metadata technologies. “Our simple idea is if we had more data about the data we need, we’d be able to make integration simpler because we would have accumulated more knowledge about how to get just the data we need to the right applications and end points,” Yaskin added.

Steve Yaskin, CTO, Queplix Corp.

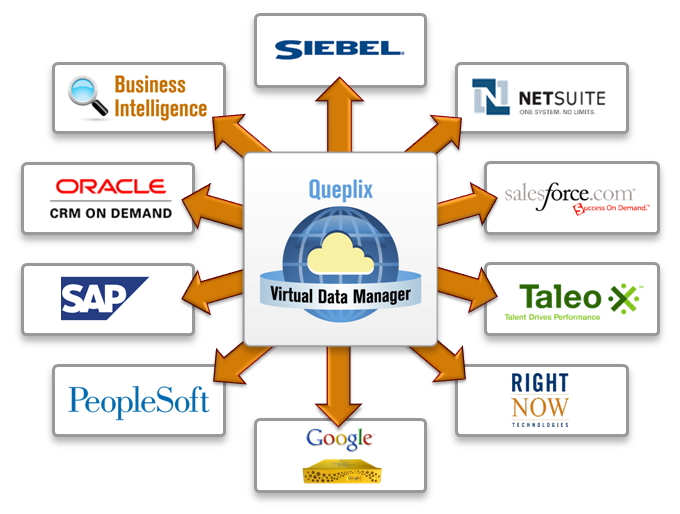

Queplix differs from ETL approaches in that it uses a persistent metadata server to provide endpoint and data integration in a hub-and-spoke architecture. “With our persistent metadata server as the central location [for data integration] we can reach out to multiple sources [and targets] without pipes,” Yaskin said.
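Yaskin’s scaling argument can be put in rough numbers. The sketch below is an idealized illustration, not Queplix data: it assumes the worst case, in which every endpoint must exchange data with every other endpoint. Point-to-point integration then needs one connection per pair, n(n-1)/2, while a hub-and-spoke design needs one connection per endpoint to the hub. The function names are hypothetical.

```python
def point_to_point_links(n: int) -> int:
    """Full mesh: one direct connection per pair of endpoints."""
    return n * (n - 1) // 2


def hub_and_spoke_links(n: int) -> int:
    """Hub and spoke: one connection from each endpoint to the central hub."""
    return n


for n in (5, 10, 50):
    print(f"{n} endpoints: point-to-point={point_to_point_links(n)}, "
          f"hub-and-spoke={hub_and_spoke_links(n)}")
# 5 endpoints: point-to-point=10, hub-and-spoke=5
# 10 endpoints: point-to-point=45, hub-and-spoke=10
# 50 endpoints: point-to-point=1225, hub-and-spoke=50
```

Real deployments rarely wire every pair together, so the point-to-point figure is an upper bound. Still, it grows quadratically with the number of endpoints, while the hub-and-spoke count grows only linearly.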

Queplix neither moves data nor creates data marts; rather, it “interrogates” enterprise data, leaving it right where it resides. To do this, the company uses a series of intelligent application software blades that identify and extract key data and associated security information from many key enterprise apps. The blades contain software that “crawls” all data sources to identify the metadata and other information about the data.

“We extract metadata from the [data] and then build a metadata catalogue that describes all those objects into an object model, not a relational model,” Yaskin said.
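Queplix’s blades are proprietary, and the article does not describe how they work internally. As a rough illustration of the general idea, reading a source’s schema metadata into catalogue entries without copying its rows, the following sketch uses Python’s built-in `sqlite3` module as a stand-in for an enterprise database. `crawl_sqlite`, `ObjectMeta` and `FieldMeta` are hypothetical names. The only SQLite features used are the `sqlite_master` table and `PRAGMA table_info`.

```python
import sqlite3
from dataclasses import dataclass, field


@dataclass
class FieldMeta:
    name: str
    type: str
    primary_key: bool


@dataclass
class ObjectMeta:
    """Catalogue entry describing one source table as an object."""
    source: str
    name: str
    fields: list[FieldMeta] = field(default_factory=list)


def crawl_sqlite(conn: sqlite3.Connection, source: str) -> dict[str, ObjectMeta]:
    """Hypothetical blade: read schema metadata only; no rows are read or copied."""
    catalogue: dict[str, ObjectMeta] = {}
    tables = conn.execute(
        "SELECT name FROM sqlite_master WHERE type = 'table' ORDER BY name"
    ).fetchall()
    for (table,) in tables:
        obj = ObjectMeta(source=source, name=table)
        # Each PRAGMA table_info row is (cid, name, type, notnull, dflt_value, pk).
        for _cid, col, col_type, _notnull, _default, pk in conn.execute(
            f'PRAGMA table_info("{table}")'
        ):
            obj.fields.append(FieldMeta(col, col_type, bool(pk)))
        catalogue[table] = obj
    return catalogue


conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customer (id INTEGER PRIMARY KEY, name TEXT, email TEXT)")
conn.execute("CREATE TABLE invoice (id INTEGER PRIMARY KEY, customer_id INTEGER, amount REAL)")
catalogue = crawl_sqlite(conn, "erp_db")
print({name: [f.name for f in obj.fields] for name, obj in catalogue.items()})
# {'customer': ['id', 'name', 'email'], 'invoice': ['id', 'customer_id', 'amount']}
```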

From that, Queplix constructs a centralized virtual store (or what Queplix calls “a virtual catalogue”), in which all the data (and associated information) can be stored as objects. “This means a wide range of information can be presented as an object – data, tables, XML, SOAP, whatever – everything becomes an object in a virtual or metadata catalogue location.”

At runtime, the data virtualization-driven integration is performed by the Queplix Engine. Once data is integrated, Queplix’s data harmonization offering keeps it consistent across applications through an automated data synchronization process.
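The article does not say how Queplix resolves conflicting values during harmonization. The sketch below assumes a simple last-writer-wins rule: for one shared field, it picks the most recently updated copy and lists the applications whose copies need to be brought in line. `FieldValue`, `harmonize`, the application keys and the timestamps are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class FieldValue:
    value: str
    updated_at: int  # epoch seconds; assumes clocks are comparable across apps


def harmonize(copies: dict[str, FieldValue]) -> tuple[str, list[str]]:
    """Pick the winning value for one field and list the apps holding other values.

    Assumed policy: last writer wins; on equal timestamps the app whose name
    sorts last wins, so the result is deterministic.
    """
    winner_app = max(copies, key=lambda app: (copies[app].updated_at, app))
    winner = copies[winner_app].value
    stale = sorted(app for app, fv in copies.items() if fv.value != winner)
    return winner, stale


phone = {
    "salesforce": FieldValue("+1 555 0100", updated_at=1_700_000_500),
    "netsuite": FieldValue("+1 555 0199", updated_at=1_700_000_100),
    "sap": FieldValue("+1 555 0100", updated_at=1_700_000_300),
}
value, to_update = harmonize(phone)
print(value, to_update)  # +1 555 0100 ['netsuite']
```

Last-writer-wins is only one possible policy; a system could instead designate one application as the system of record for each field.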

So, for example, Queplix can connect to an Oracle database with 5,000 to 10,000 tables and produce a set of business objects, represented by metadata, Yaskin explained. “We have an abstraction layer, which creates the virtual catalogue. This lets you integrate or transform just the data you want and put it where you want it – without the need to move any data over pipes,” he added. “So, when you want information about your customer, we have an object called ‘customer’ that you can share with other on-premise or cloud applications, or even use to make sure all fields in different applications are consistent.”
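The key property Yaskin describes is that the virtual “customer” object is defined once in the catalogue while its data stays in the source systems. A minimal sketch of that idea, assuming each field of the virtual object is backed by a resolver function that queries its source at read time: an in-memory SQLite table stands in for the Oracle database, and a plain dictionary stands in for a SaaS application. `VirtualObject`, the table and the field names are hypothetical.

```python
import sqlite3
from typing import Any, Callable

Resolver = Callable[[str], Any]  # fetches one field for a given key, on demand


class VirtualObject:
    """Hypothetical virtual business object: a mapping from fields to sources.

    Nothing is copied when the object is defined; each read is delegated
    to the underlying source at query time.
    """

    def __init__(self, name: str, resolvers: dict[str, Resolver]):
        self.name = name
        self._resolvers = resolvers

    def get(self, key: str, field: str) -> Any:
        return self._resolvers[field](key)

    def fetch(self, key: str) -> dict[str, Any]:
        return {f: resolve(key) for f, resolve in self._resolvers.items()}


# Source 1: an on-premise relational database (SQLite stands in for Oracle).
db = sqlite3.connect(":memory:")
db.execute("CREATE TABLE cust (cust_id TEXT PRIMARY KEY, cust_nm TEXT)")
db.execute("INSERT INTO cust VALUES ('C42', 'Acme Corp')")

# Source 2: a SaaS application, modeled here as a plain dict.
saas_accounts = {"C42": {"Industry": "Manufacturing"}}

customer = VirtualObject("customer", {
    "name": lambda k: db.execute(
        "SELECT cust_nm FROM cust WHERE cust_id = ?", (k,)
    ).fetchone()[0],
    "industry": lambda k: saas_accounts[k]["Industry"],
})

print(customer.fetch("C42"))  # {'name': 'Acme Corp', 'industry': 'Manufacturing'}
```

Because every read goes back to the source, an update made in the underlying table is visible through the virtual object immediately, with no copy to refresh.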

Queplix can work with relational, object and other structured data, as well as unstructured or semi-structured data, extracting metadata and creating XML structures and virtual mappings, he added. Within Queplix, this virtual mapping achieves integration by merging the metadata objects, which eliminates manual data mapping tasks, particularly the field mapping that many MDM projects require.
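The article does not detail how merging metadata objects produces field mappings. One common heuristic, shown here purely as an assumption, is to normalize each field name and look it up in a synonym table, so that fields from two applications that resolve to the same canonical name are mapped to each other. The `SYNONYMS` table, the field names and the functions are hypothetical.

```python
import re
from typing import Optional

# Hypothetical synonym table mapping normalized field names to canonical ones.
SYNONYMS = {
    "name": "name", "accountname": "name", "custnm": "name",
    "phone": "phone", "telephone": "phone", "tel": "phone",
    "email": "email", "emailaddress": "email", "mail": "email",
}


def canonical(field_name: str) -> Optional[str]:
    """Lowercase, drop non-alphanumerics, then look up a canonical name."""
    key = re.sub(r"[^a-z0-9]", "", field_name.lower())
    return SYNONYMS.get(key)


def merge_mappings(source: list[str], target: list[str]) -> dict[str, str]:
    """Map each source field to the first target field with the same canonical name."""
    target_by_canon: dict[str, str] = {}
    for t in target:
        c = canonical(t)
        if c is not None:
            target_by_canon.setdefault(c, t)
    mapping: dict[str, str] = {}
    for s in source:
        c = canonical(s)
        if c is not None and c in target_by_canon:
            mapping[s] = target_by_canon[c]
    return mapping


erp_fields = ["CUST_NM", "TELEPHONE", "EMAIL_ADDRESS", "CREDIT_LIMIT"]
crm_fields = ["Name", "Phone", "Email"]
mapping = merge_mappings(erp_fields, crm_fields)
unmapped = [f for f in erp_fields if f not in mapping]
print(mapping)   # {'CUST_NM': 'Name', 'TELEPHONE': 'Phone', 'EMAIL_ADDRESS': 'Email'}
print(unmapped)  # ['CREDIT_LIMIT']
```

Fields with no canonical match, such as `CREDIT_LIMIT` here, are left unmapped for a person to review rather than guessed.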

“Queplix does not require data owners to make any changes to the way they handle data because our blades contain all the required metadata they need to interrogate their data sources and extract the metadata we need,” Yaskin said.

As a result of Queplix’s approach to data virtualization, metadata capture and automation, companies can reduce the lifecycle total cost of ownership for such data integration and management by up to 75% compared with ETL tools, he added.

“Apps like these continue to push the social, open, mobile and trusted capabilities customers expect from the salesforce.com ecosystem,” said Ron Huddleston, Salesforce vice president for ISV Alliances, in a statement.